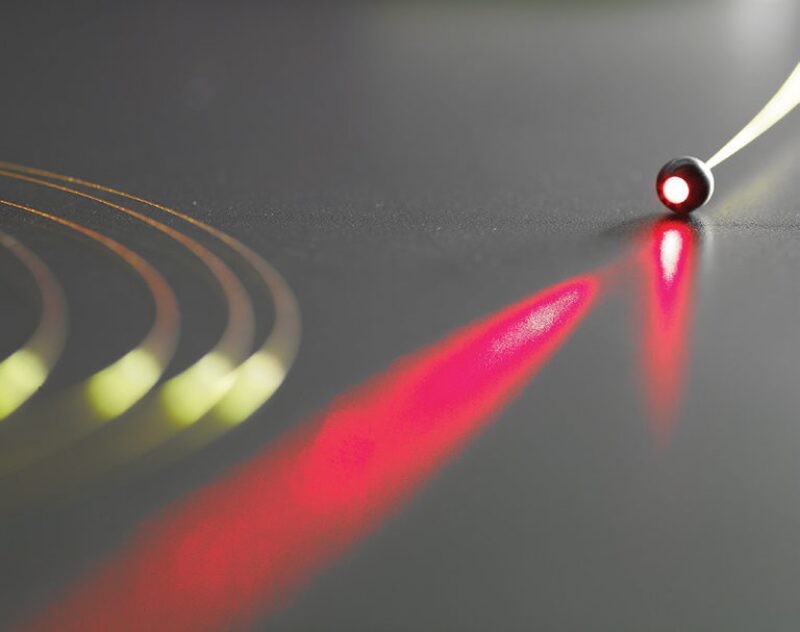

It has been an impressive comeback for a technology that once stood on the brink of failure. The upstream oil and gas industry has largely resolved crippling technical challenges that shortened the life of fiber-optic cables in downhole applications and is now working on a big encore. The developers of the technology have been successful in showing operators what can be gained by using permanent fiber-optic monitoring in a small number of wells. The next step is to broaden the market by expanding what the technology can do.

A number of research and development projects aimed at maturing new applications for fiber-optic systems are in progress. Taken together, they stand to improve the return on investment for operators as the technology slowly takes the shape of a central nervous system for oil and gas wells. Put simply, those working in the fiber-optic sector want to provide engineers with real numbers to enable managed production strategies that will increase overall production.

By far the most widely used form of fiber-optic technology today is distributed temperature sensing (DTS), and its development is therefore relatively mature. Initially, many of Schlumberger’s customers viewed DTS as a niche product. But after pumping down more than 17 million ft of fiber-optic cable into more than 1,500 wells, the oilfield service company is experiencing greater global demand than ever, something it credits to better data.

“In the old days, the fiber data was marred by reliability, by fiber darkening, and those kinds of things. The big growth that we are seeing today is really because of the improved reliability, ease of data transmission, and customers having a lot more confidence in the data and being able to use that data,” said John Lovell, product line manager of distributed measurement and completions division at Schlumberger. “Now that we have good data going, you can start moving from a qualitative assessment to much more of a quantitative assessment.”

However, for all the benefits that downhole fiber optics is delivering, more breakthroughs are needed to bring about the next evolutionary step of the technology. Multiple companies are working on ways to adapt fiber optics to determine flow characteristics and related physical properties inside the pipe and near the wellbore. Managing the ever-growing sets of data being produced specifically through distributed acoustic sensing (DAS) is an ongoing problem that needs to be solved.

More work is under way to expand the computer systems and applications used by service providers so operators can use competing laser and optical receiving systems, known as interrogator units, and draw out the data in a universal format for interpretation. Oil and gas companies also need more ways to integrate and correlate the fiber-optic data with conventional production data. And then there is the unresolved issue of how the data should be shared between the service providers and the operators. “These are all good problems to have,” said Zafar Kamal, manager of sensors and systems integration at BP. “I think they will be solved, it is just a question of how efficiently can we do it, how fast we can do it, and who gets there first.”

Demand Rising With Value

The companies watching the rate of adoption accelerate say it is the result of a confluence of technological advancements in the upstream industry that have increased the durability and reliability of downhole fiber-optic systems. Major improvements from the material sciences sector have resulted in glass chemistry that yields strong fiber cables able to withstand harsh well environments and extreme temperatures for years at a time. Leaps in computer processing speed and better algorithms have made the process of interpreting the raw data easier and fast enough to monitor in real time. And the ability to couple a fiber-optic system with valves and production control equipment inside “intelligent” wells means that companies can manage their production and injection operations with greater confidence than before.

For Mikko Jaaskelainen, senior technology manager at Pinnacle, Halliburton’s fiber-optic and laser division, the widespread acceptance of fiber-optic monitoring has been a long time coming. He has worked in the sector for more than 18 years and said up until a few years ago, his company was taking orders for perhaps one, two, or at most three, fiber-optic systems per field. Now, companies are installing tens of units per field and Halliburton is working through a backlog to keep up with rising demand. “We are seeing an order of magnitude increase in regards to uptake by operators as they see the value and learn how to use the data,” Jaaskelainen said.

The industry shift toward more complex and capital-intensive reservoir development projects is a strong indicator that the use of downhole fiber-optic sensors will continue to increase. For expensive deepwater wells, adoption rates for fiber-optic systems are increasing as operators seek to reduce spending on well intervention and gain critical reservoir behavior knowledge.

Because the price tag is still too large to justify installing one inside every well of an onshore field, companies are using fiber-optic systems inside a few producing wells, or injector wells, and then extrapolating fieldwide learnings from that sample. Kamal said that the tendency is to use the technology in high-yield fields and wells—perhaps those producing 10,000 BOEPD or more. “That is where continuous production monitoring becomes of tremendously high value,” he said.

Interoperability Is Key

The standardization of oilfield equipment components has been a longtime industry quest. So it is no surprise that a similar push is being made in fiber-optic software technology. The collaboration between the makers and users of distributed fiber-optic systems has resulted in a standard for transferring DTS data, known as Production Markup Language (PRODML). The common standards for DTS were first developed in 2008 and a third update is expected to be released to energy companies and their vendors later this year.

The 9-year-old project, led by the Energistics consortium, is aimed not only at fiber-optic standardization, but also a wide range of applications involving upstream software technology. Work on a version of the software language for DAS is ongoing and is described as being in the very early stages. BP is one of the major oil companies that founded the PRODML initiative along with several technology developers and service companies, including Schlumberger and Halliburton.

In addition to being charged with integrating the technology into BP’s operations, Kamal also serves as a PRODML board member at Energistics. He said one of the reasons that a universal protocol is needed is so that companies can standardize data management and its integration into industry standard systems and applications, regardless of location and the vendor delivering the data—a critical step to scaling up the technology and training more workers. “We need to make sure we can use anyone’s fiber, cable, deployment services, interrogation box, and then take that data to whatever expert I want to for the interpretation,” said Kamal. “We are absolutely, totally, committed to making this thing as open and interoperable as possible.”

The Unconventional Driver

Some of the recent advancements in DTS have been driven by the North American shale revolution. Ten years ago, about 90% of Halliburton’s installations were inside vertical wells. Since then, the majority of installations have moved to horizontal wells as North American producers switched their focus to liquid-rich shale plays. Inside a natural gas well, the temperature differences are easily detected with DTS because of the cooling effect of dry gas, a natural phenomenon known as the Joule-Thomson effect. But in oil-producing wells, the thermal differences are much smaller, which means that the accuracy of the measurements must be enhanced.
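For readers who want the physics behind that contrast, the Joule-Thomson effect is conventionally written as shown below. This is standard textbook thermodynamics added for illustration, not a formula supplied by the companies quoted here, and the symbols are introduced only for this note.

$$
\mu_{\mathrm{JT}} = \left(\frac{\partial T}{\partial P}\right)_{H}, \qquad \Delta T \approx \mu_{\mathrm{JT}}\,\Delta P
$$

Here $T$ is temperature, $P$ is pressure, $H$ is enthalpy (held constant), and $\Delta P$ is the pressure change as fluid flows from the reservoir into the wellbore, which is negative for a drawdown. Gas at typical reservoir conditions has a comparatively large positive coefficient $\mu_{\mathrm{JT}}$, so a pressure drop cools it noticeably. Liquids such as oil have a coefficient much smaller in magnitude, so the same drawdown produces only a slight temperature change, which is why a more accurate DTS measurement is needed to resolve it.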

To overcome the challenges presented with horizontal oil wells, Jaaskelainen said that Halliburton developed a new line of DTS technology about 10 times more accurate than the generation of systems developed for vertical wells. “That translates into re-engineering and optimizing the systems,” he said. “This is what the shift from gas to liquid-rich plays has meant to us.”

Blending and Fingerprinting

Enabling data blending has become another focus for developers, including Baker Hughes, that see it as a way to further enhance artificial lift and improved oil recovery methods, such as steam-assisted gravity drainage and chemical injections. Combining and correlating the fiber-optic data with more conventional data sets, such as wellhead gauge readings and logging data, will provide operators with even more clarity about the state of their well.

To get there, operators will need to share more of the detailed well information with the company deploying the fiber-optic technology. As the value of this data becomes increasingly apparent, questions arise over who should own or control the data. “You need contextual information from other sources in order to really understand at a deep level what is happening in the well,” said Tommy Denney, a product line manager of production decision services at Baker Hughes. “Historically, that information is not made available to the service companies that are going to be producing this fiber-optic data.” How an information-sharing system would be managed and by whom, he added, has yet to be worked out.

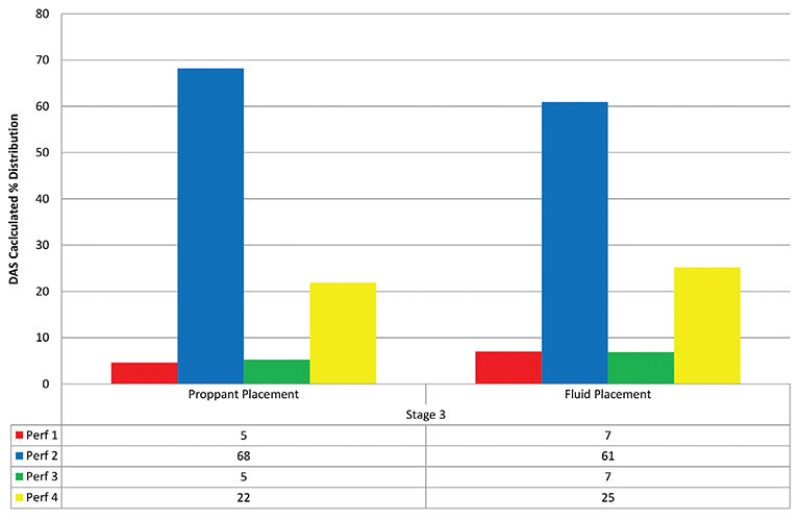

Companies are looking for other ways to extract more value from the fiber-optic data by finding sounds that measure injection or production performance, such as the volume of proppant placed in a particular stage during a hydraulic fracturing job or where water might be intruding into the wellbore in the production stage. This is known as fingerprinting. One of the challenges that developers have found with fingerprinting involves the repeatability of the events. Lovell of Schlumberger said that fingerprinting will reduce the amount of raw data that has to be transmitted, but the industry still needs more data and time to distinguish reliable signals from the noise. “If you find a fingerprint that works most of the time, but then 10% of the time it does not, then it is very hard to automate based on that,” he said.

As work continues on fingerprinting, there remains the problem of how to decide which company is in charge of this critical information: the operator, the service company, or the analytics company. The answer will likely be the company that has the most data and the most contextual information about the various applications. “This is an area where (intellectual property) obviously becomes a bit of an impediment,” said Denney of Baker Hughes. “Because whoever can crack the fingerprint for these events can really streamline the way they handle this data.”

Looking for Quantitative Measurements

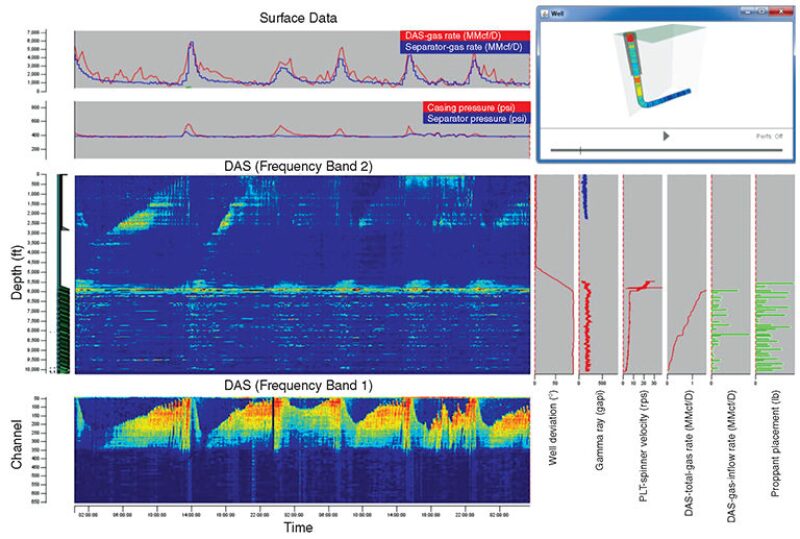

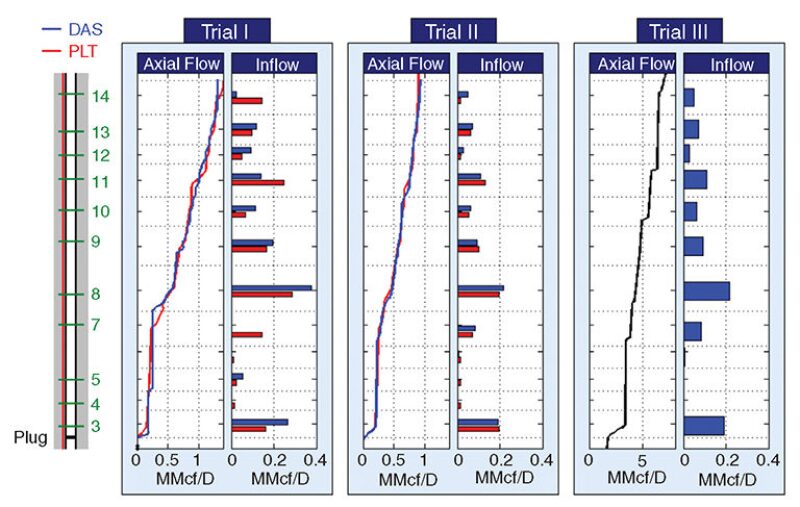

Despite the flood of newly acquired fiber-optic well data, operators want more precise information about what is happening downhole than what the current technology offers. Today most DTS and DAS systems are limited to offering a descriptive, or qualitative, observation. There is value in qualitative data; however, engineers prefer to work with numbers, not descriptions. With quantitative measurements, companies will know more about which part of the well is the most productive, or which perforations are producing the most water, and how much. Technology developers know operators want this and are closing in on the goal.

Lovell said that in the near future, engineers using Schlumberger’s DAS systems should be able to see on their computer screens the properties of the actual produced fluids and track their velocity, which is information that can be tied to production optimization decisions. “Instead of making plots of raw temperature, or raw noises, (the system) will be making plots of the answers that we are getting from that,” he said. This will be possible, Lovell explained, because the accuracy of the data has increased over the past few years as the problem of hydrogen darkening, which decreased temperature readings, has largely been solved.

Another yet to be realized quantitative application is flow profiling. Baker Hughes is working on different methods to accomplish this using DTS and sees great potential for flow profiling applications inside so-called “intelligent well systems” that are equipped with valves for production control. Using flow profile data, “you can choke back valves in particular zones if your data is telling you that you are getting too much water from that area, and you can increase flow in others if it is a productive zone,” said Roger Duncan, product line manager of optical monitoring at Baker Hughes.

With DTS systems capable of flow profiling installed in a large enough set of wells in a field, “then you can really start to understand how the reservoir is behaving in a much more dynamic sense,” said Kevin Holmes, manager of the fiber-optic systems product line at Baker Hughes.
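The zone-by-zone valve logic that Duncan describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ZoneReading` type, the function names, and the two thresholds (`max_water_cut`, `min_oil_rate`) are hypothetical and do not come from Baker Hughes or any real control system.

```python
from dataclasses import dataclass


@dataclass
class ZoneReading:
    """Hypothetical per-zone flow estimate derived from a flow profile."""
    name: str
    oil_rate: float    # assumed units: barrels per day
    water_rate: float  # assumed units: barrels per day


def water_cut(zone: ZoneReading) -> float:
    """Fraction of the zone's total liquid rate that is water."""
    total = zone.oil_rate + zone.water_rate
    return zone.water_rate / total if total > 0 else 0.0


def recommend_valve_actions(zones, max_water_cut=0.6, min_oil_rate=500.0):
    """Suggest an action per zone.

    Both thresholds are illustrative assumptions, not field guidance:
    - a zone above max_water_cut is choked back;
    - otherwise, a zone producing at least min_oil_rate is opened up;
    - anything else is left unchanged.
    """
    actions = {}
    for zone in zones:
        if water_cut(zone) > max_water_cut:
            actions[zone.name] = "choke back"
        elif zone.oil_rate >= min_oil_rate:
            actions[zone.name] = "open up"
        else:
            actions[zone.name] = "hold"
    return actions


# Example with made-up numbers for three zones.
example_zones = [
    ZoneReading("A", oil_rate=1200.0, water_rate=300.0),
    ZoneReading("B", oil_rate=200.0, water_rate=800.0),
    ZoneReading("C", oil_rate=100.0, water_rate=50.0),
]
example_actions = recommend_valve_actions(example_zones)
```

In practice the decision would depend on far more than two thresholds, but the sketch shows why quantitative per-zone rates, rather than qualitative observations, are a prerequisite for this kind of control.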

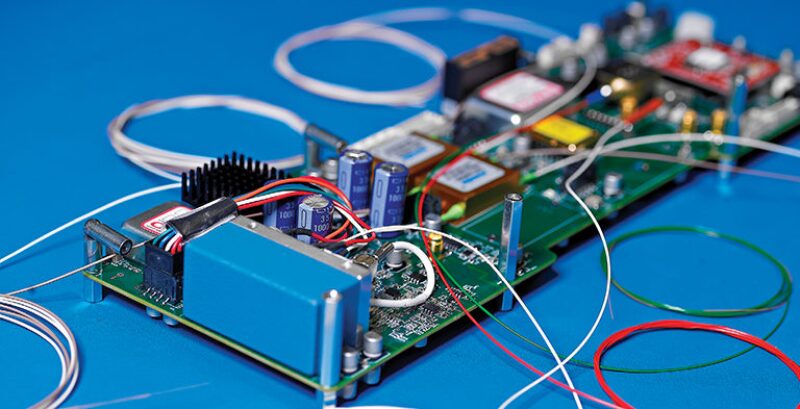

OptaSense, a subsidiary of the United Kingdom-based defense contractor QinetiQ, is developing quantitative solutions for DAS. The company has incorporated algorithms authored by its largest oil and gas clients into the surface interrogator equipment to quantitatively determine the flow of oil and gas. Each day, a package of data containing flow metrics and parameters is uploaded from the well location to a remote server via a satellite uplink. “This means we have reduced the amount of data that needs to be managed,” said Andrew Lewis, a managing director at OptaSense. “That has been a key issue.”

Acoustic Data Challenge

On another front, the industry is working to overcome the data management challenges presented by DAS, which delivers so much data in a short period of time that storing all of it in stacks of hard drives has become a tiresome headache for many companies. Conventional pressure gauges generate small amounts of data per day, perhaps a megabyte at most. A DTS unit is capable of streaming out gigabytes of data each day, enough to fill a thumb drive or two. But DAS is in a league of its own, creating terabytes of information over the course of just a few hours. During a typical hydraulic fracturing monitoring operation, a DAS system may generate around 5 TB a day, or with ultrasensitive systems 1 TB an hour. “That is a huge, huge challenge,” said Kamal of BP. “And I have not yet seen a solution that will get the problem solved.”
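To put those orders of magnitude side by side, the following Python sketch converts the rough figures quoted above into daily byte counts. The DTS value of 1 GB per day and the 4 TB drive size are assumptions chosen for illustration; the article gives only "gigabytes" for DTS and does not specify a drive capacity.

```python
# Decimal units, as storage vendors typically quote them.
MB = 10**6
GB = 10**9
TB = 10**12

# Approximate daily volumes based on the figures quoted in the article.
DAILY_BYTES = {
    "pressure gauge": 1 * MB,         # "perhaps a megabyte at most"
    "DTS": 1 * GB,                    # assumption: low end of "gigabytes"
    "DAS (typical)": 5 * TB,          # "around 5 TB a day"
    "DAS (ultrasensitive)": 24 * TB,  # "1 TB an hour" sustained for 24 hours
}


def drives_needed(total_bytes: int, drive_bytes: int = 4 * TB) -> int:
    """Whole drives required to hold total_bytes (drive size is an assumption)."""
    return -(-total_bytes // drive_bytes)  # ceiling division on integers


def ratio_to_gauge(source: str) -> float:
    """How many times more data a source produces per day than a pressure gauge."""
    return DAILY_BYTES[source] / DAILY_BYTES["pressure gauge"]


if __name__ == "__main__":
    for source, volume in DAILY_BYTES.items():
        print(
            f"{source}: {volume / TB:.6f} TB/day, "
            f"{drives_needed(volume)} drive(s)/day, "
            f"{ratio_to_gauge(source):,.0f}x a pressure gauge"
        )
```

Under these assumptions, a single day of typical DAS recording is millions of times larger than a day of pressure-gauge data, which is the gap that makes purpose-built data management a pressing need.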

Without a purpose-designed data management system to handle this workload, Kamal said that today’s operators are using a patchwork of software solutions to extract and compress the large data sets from DAS. To help analyze the data, BP plans on using its 2.2-petaflop supercomputer at its center for high performance computing in Houston that went online late last year. It is already using the supercomputer for seismic interpretation and reservoir modeling and there are plans to increase the computer’s processing speed.

OptaSense is dealing with the data management problem by trying to take it off the minds of its customers. Many oil and gas companies prefer to keep DAS information to themselves, considering it proprietary. But OptaSense said that its handling of the data was driven by customers’ demands to reduce their workload and provide just the facts they needed in a simple report. “They did not want to have three or four hard drives worth of data and then have to get someone to spend time going through it,” said Lewis. “Once they have seen that we have proven the relationship between the measurements we make to reality, they no longer wanted to see the raw data.”

For example, in a fracture monitoring operation, OptaSense provides its customers with a report detailing information such as the amount of proppant successfully placed into each set of perforations. The company keeps the raw data as a backup and eliminates the need for data management by the customer. OptaSense is working on a new software product to analyze, visualize, and automatically extract key findings in the DAS raw data. In the spirit of collaboration, the company is considering making the software an open format to allow for its use with DAS data acquired by others. “I think that probably is the right move for the industry,” Lewis said.

Under Interrogation: Where the Magic Happens

Overcoming Hydrogen Darkening